Frequency probability: concept, how it is calculated, and examples

Frequency probability is a sub-definition within the study of probability and its phenomena. Its method of studying events and attributes relies on a large number of iterations, observing the trend of each outcome in the long run or even over infinitely many repetitions.

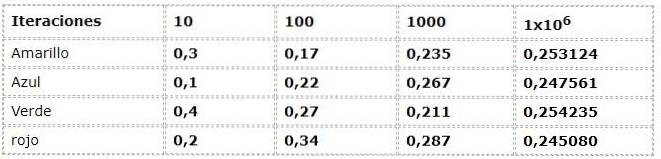

For example, a bag of gummies contains 5 gummies of each color: blue, red, green and yellow. We want to determine the probability of each color coming out in a random selection.

It is tedious to imagine taking out a gummy, recording it, returning it, and repeating the same thing several hundred or several thousand times. You may even want to observe the behavior after several million iterations.

Nevertheless, it is interesting to discover that after a few repetitions the expected probability of 25% is not fully met, at least not for all colors, even after 100 iterations.

Under the frequency approach, probability values are assigned only through the study of many iterations. The process should therefore be carried out and recorded, preferably by computer or simulation.

Several schools of thought reject frequency probability, arguing that its randomness criteria lack empiricism and reliability.

How is the frequency probability calculated?

By programming the experiment in any environment capable of producing random iterations, one can begin to study the frequency probability of the phenomenon using a table of values.

The previous example can be seen from the frequency approach:

The numerical data correspond to the expression:

N(a) = Number of occurrences of a / Number of iterations

where N(a) represents the relative frequency of the event a.

The event a belongs to the set of possible outcomes, or sample space, Ω:

Ω = {red, green, blue, yellow}
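The expression above can be tried out with a short simulation. The following is only a minimal sketch: the function name `relative_frequencies` and the `seed` parameter are illustrative assumptions, and Python's pseudo-random generator stands in for the random selection described in the text.

```python
import random
from collections import Counter

COLORS = ["red", "green", "blue", "yellow"]  # sample space Ω


def relative_frequencies(iterations, seed=None):
    """Draw one gummy per iteration (with replacement) and return N(a) per color."""
    rng = random.Random(seed)  # a seed makes a run repeatable (assumption, not in the article)
    # 5 gummies of each color, as in the example
    bag = [color for color in COLORS for _ in range(5)]
    counts = Counter(rng.choice(bag) for _ in range(iterations))
    # N(a) = number of occurrences of a / number of iterations
    return {color: counts[color] / iterations for color in COLORS}


if __name__ == "__main__":
    for color, freq in relative_frequencies(100, seed=1).items():
        print(f"N({color}) = {freq:.3f}")
```

Because each gummy is returned to the bag, every iteration is drawn from the same 20 gummies, and the four relative frequencies always add up to 1.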

Considerable dispersion can be seen in the first iterations, with frequencies differing from one another by up to 30%, which is very high for an experiment whose events are theoretically equally likely (equiprobable).

But as the iterations grow, the values adjust more and more closely to those given by the theoretical and logical approach.

Law of large numbers

The law of large numbers arises as an unexpected agreement between the theoretical and frequency approaches. It establishes that, after a considerable number of iterations, the values of the frequency experiment approach the theoretical values.

In the example, the values approach 0.250 as the iterations grow. This phenomenon is fundamental to the conclusions of many probabilistic works.
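This convergence can be observed with a small experiment. The sketch below is an illustration under stated assumptions: the helper `max_deviation` and the chosen iteration counts are hypothetical, and the four colors are represented by the indices 0 to 3. It reports how far the worst color is from the theoretical 0.25.

```python
import random


def max_deviation(iterations, seed=None):
    """Largest gap between any color's relative frequency and the theoretical 0.25."""
    rng = random.Random(seed)
    counts = [0, 0, 0, 0]  # one counter per color
    for _ in range(iterations):
        counts[rng.randrange(4)] += 1  # each color is equally likely
    return max(abs(c / iterations - 0.25) for c in counts)


if __name__ == "__main__":
    for n in (10, 100, 1_000, 10_000, 100_000):
        print(f"{n:>7} iterations: max deviation = {max_deviation(n, seed=42):.4f}")
```

The deviation does not have to shrink at every step, but for large iteration counts it tends toward zero, which is the behavior the law describes.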

Other approaches to probability

In addition to frequency probability, there are two other theories or approaches to the notion of probability.

Logical theory

Its approach is oriented toward the deductive logic of phenomena. In the previous example, the probability of obtaining each color is exactly 25%. In other words, its definitions and axioms do not contemplate deviations outside their range of probabilistic values.
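For comparison with the frequency approach, the 25% value can be deduced directly by counting favorable cases over possible cases, with no iterations at all. This is a minimal sketch; the variable names `bag` and `classical` are illustrative.

```python
from fractions import Fraction

# Contents of the bag from the example: 5 gummies of each color
bag = {"blue": 5, "red": 5, "green": 5, "yellow": 5}
total = sum(bag.values())

# Classical probability: favorable cases / possible cases
classical = {color: Fraction(count, total) for color, count in bag.items()}

for color, p in classical.items():
    print(f"P({color}) = {p} = {float(p):.2f}")
```

Exact fractions are used so that the result is 1/4 for every color without any rounding.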

Subjective theory

It is based on the knowledge and prior beliefs that each individual has about phenomena and attributes. Statements such as "It always rains at Easter" stem from a pattern of similar events that have occurred previously.

History

Its use dates back to the 19th century, when Venn cited it in several of his works at Cambridge, England. But it was not until well into the 20th century that two statistical mathematicians developed and shaped frequency probability.

One of them was Hans Reichenbach, who developed his work in publications such as "The Theory of Probability", published in 1949.

The other was Richard von Mises, who further developed his work through multiple publications and proposed considering probability as a mathematical science. This concept was new to mathematics and would usher in an era of growth in the study of frequency probability.

In fact, this marks the only difference from the contributions made by the generation of Venn, Cournot and Helm: probability becomes comparable to sciences such as geometry and mechanics.

"The theory of probability deals with mass phenomena and repetitive events: problems in which either the same event is repeated over and over, or a large number of uniform elements are involved at the same time." (Richard von Mises)

Mass phenomena and repetitive events

They can be classified into three types:

- Physical: they obey patterns of nature beyond a condition of randomness, for example the behavior of the molecules of an element in a sample.

- Chance: the primary consideration is randomness, such as rolling a die repeatedly.

- Biological statistics: selections of test subjects according to their characteristics and attributes.

In theory, the individual who measures plays a role in the probabilistic value, because it is their knowledge and experience that shape this value or prediction.

In frequency probability, by contrast, events are treated as collections, and the individual plays no role in the estimate.

Attributes

Each element has an attribute, which varies according to its nature. For example, in a physical phenomenon, the water molecules will have different speeds.

In the roll of a die, we know the sample space Ω that represents the attributes of the experiment.

Ω = {1, 2, 3, 4, 5, 6}

There are other attributes, such as being even (ΩP) or being odd (ΩI):

ΩP = {2, 4, 6}

ΩI = {1, 3, 5}

These can be defined as non-elementary attributes.
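The relative frequency of a non-elementary attribute can be estimated in the same way as that of a single outcome, by counting how often a roll falls inside the corresponding set. The sketch below assumes a fair die simulated with Python's pseudo-random generator; the names `OMEGA_EVEN`, `OMEGA_ODD` and `attribute_frequency` are illustrative.

```python
import random

OMEGA = {1, 2, 3, 4, 5, 6}   # sample space Ω
OMEGA_EVEN = {2, 4, 6}       # ΩP, the attribute "being even"
OMEGA_ODD = {1, 3, 5}        # ΩI, the attribute "being odd"


def attribute_frequency(attribute, iterations, seed=None):
    """Relative frequency with which a die roll falls inside the given attribute set."""
    rng = random.Random(seed)
    hits = sum(rng.randint(1, 6) in attribute for _ in range(iterations))
    return hits / iterations


if __name__ == "__main__":
    print("even:", attribute_frequency(OMEGA_EVEN, 10_000, seed=1))
    print("odd: ", attribute_frequency(OMEGA_ODD, 10_000, seed=1))
```

Since ΩP and ΩI do not overlap and together cover Ω, running both with the same seed yields two frequencies that add up to 1.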

Example

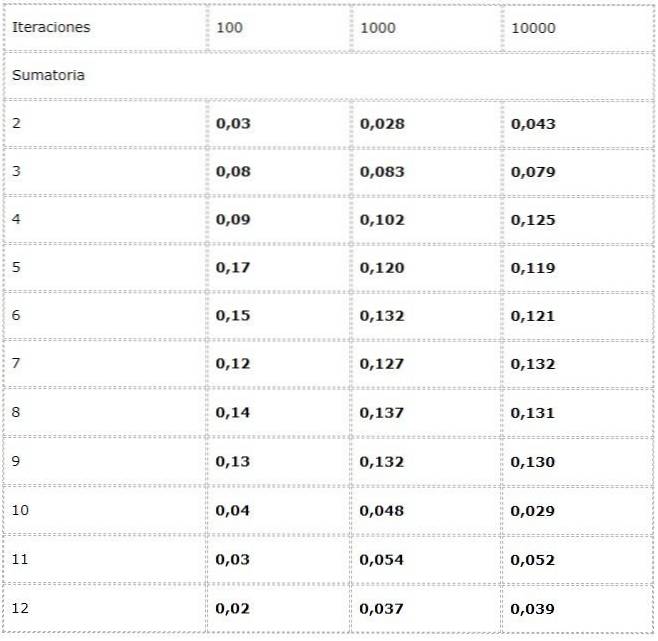

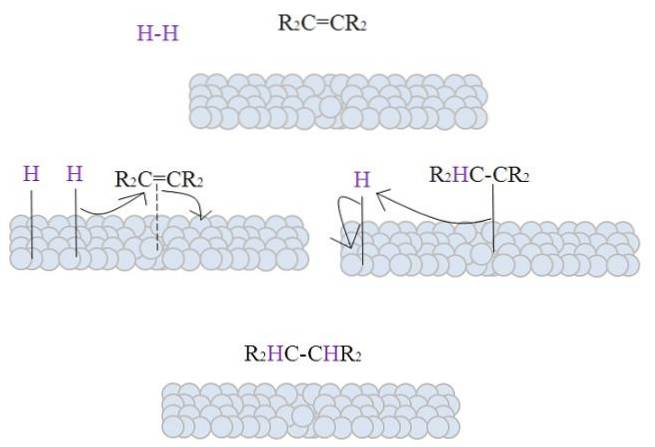

- We want to calculate the frequency of each possible sum when throwing two dice.

For this, an experiment is programmed in which two random values in the range [1, 6] are added in each iteration.

The data are recorded in a table, and the trends over large numbers of iterations are studied.

It is observed that the results can vary considerably between the iterations. However, the law of large numbers can be seen in the apparent convergence presented in the last two columns.
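An experiment of this kind could be programmed as follows. This is a sketch under stated assumptions, not the program used to produce the tables in the images: the function names are hypothetical, and the theoretical column is computed by counting the ordered pairs of faces that give each sum, out of 36.

```python
import random
from collections import Counter
from fractions import Fraction


def theoretical_sum_probability(total):
    """Classical probability of a sum: favorable ordered pairs out of 36."""
    return Fraction(6 - abs(total - 7), 36)


def sum_frequencies(iterations, seed=None):
    """Relative frequency of each sum (2 to 12) over the given number of throws."""
    rng = random.Random(seed)
    counts = Counter(rng.randint(1, 6) + rng.randint(1, 6) for _ in range(iterations))
    return {total: counts[total] / iterations for total in range(2, 13)}


if __name__ == "__main__":
    few = sum_frequencies(100, seed=1)
    many = sum_frequencies(100_000, seed=1)
    print("sum   100 throws   100000 throws   theoretical")
    for total in range(2, 13):
        theory = float(theoretical_sum_probability(total))
        print(f"{total:>3}   {few[total]:>10.3f}   {many[total]:>13.3f}   {theory:>11.3f}")
```

Comparing the column for few throws with the column for many throws shows the same pattern described above: the early values can differ noticeably, while the later ones sit close to the theoretical column.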
