What does Computer Science study?

Computing is a modern science that studies the methods, processes and techniques used to process, transmit or store data digitally. With the great advance of technology from the second half of the 20th century onward, this discipline gained importance in productive activities while also becoming increasingly specialized.

The development of computers, closed circuits, robots, machinery and mobile phones, together with the emergence of the internet, has made computing one of the most popular sciences of recent decades.

The etymology of the word informatics, commonly used as a synonym for computing, has several possible origins. It is mainly regarded as a blend of the French words information and automatique (automatic information).

In 1957, Karl Steinbuch included this term (in German, Informatik) in a document called Informatik: Automatische Informationsverarbeitung. In 1962, the French engineer Philippe Dreyfus named his company Société d'Informatique Appliquée. However, the Russian Alexander Ivanovich Mikhailov was the first to use the term in the sense of the "study, organization, and dissemination of scientific information".

Within its vast field of application, this science is dedicated to the study of automatic information processing using electronic devices and computer systems, which can be used for many different purposes.

What does computer science study? Applications

The field of application of computing has broadened with technological development over the last half century, driven especially by computers and the Internet.

Its main tasks include the design, development and planning of closed circuits, document preparation, and process monitoring and control.

It is also responsible for the creation of industrial robots, for tasks in the vast field of telecommunications, and for the creation of games, applications and tools for mobile devices.

Composition of computing

Computer science is a discipline in which knowledge from various fields converges, starting with mathematics and physics, but also including computation, programming and design, among others.

This synergy between different branches of knowledge is complemented in computing by the notions of hardware, software, telecommunications, the internet and electronics.

History

The history of computing began long before the discipline that bears its name. It has accompanied humanity almost from its origins, although it was not recognized as a science.

We can speak of computing as far back as the abacus, often associated with China and traditionally dated to around 3000 BC, which is considered humanity's first calculating device.

This frame, divided into columns, allowed mathematical operations such as addition and subtraction to be performed by moving its beads. It could be considered the starting point of this science.

But with the abacus, the evolution of computing had only just begun. In the 17th century, Blaise Pascal, one of the most renowned French scientists of his time, created a mechanical calculating machine, the Pascaline, and took a further evolutionary step.

This device could only add and subtract, but it served as the basis for the German Gottfried Wilhelm Leibniz, a few decades later in the same century, to develop a similar apparatus that could also multiply and divide.

These three inventions are among the earliest recorded attempts to mechanize calculation. It would take roughly two more centuries for the discipline to gain relevance and become a science.

In the early decades of the 20th century, the development of electronics gave modern computing its final push. From then on, this branch of science began to solve technical problems arising from new technologies.

At this time, systems based on gears and rods gave way to new processes based on electrical impulses, represented by a 1 when current flows and by a 0 when it does not, a change that revolutionized the discipline.
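As a small illustration of the 1/0 idea (a sketch added for clarity, not something described in the article), the Python snippet below models each bit as a switch that is either on (1, current flowing) or off (0), and shows how a row of such bits encodes a number. The function names `to_bits` and `from_bits` and the default 8-bit width are hypothetical choices.

```python
def to_bits(n: int, width: int = 8) -> str:
    """Encode a non-negative integer as a string of `width` bits.
    Each '1' models a pulse of current and each '0' its absence."""
    if n < 0 or n >= 2 ** width:
        raise ValueError(f"{n} does not fit in {width} bits")
    bits = []
    for _ in range(width):
        bits.append("1" if n % 2 else "0")  # take the lowest bit
        n //= 2                              # shift right by one position
    return "".join(reversed(bits))          # most significant bit first


def from_bits(bits: str) -> int:
    """Decode a string of '0'/'1' characters back into an integer."""
    value = 0
    for b in bits:
        if b not in "01":
            raise ValueError(f"invalid bit: {b!r}")
        value = value * 2 + int(b)
    return value


print(to_bits(5))             # 00000101
print(from_bits("00000101"))  # 5
```

Python's built-ins `format(n, "08b")` and `int(s, 2)` perform the same conversions; the explicit loops are only there to make each step visible.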

The decisive step came during World War II with the construction of the Mark I, one of the first computers, which opened up a field of development that is still expanding.

Basic notions of computer science

Informatics, understood as the automatic processing of information through electronic devices and computer systems, requires certain basic capabilities in order to function.

Three operations are fundamental: input, which is the capture of information; processing of that information; and output, which is the transmission of results.

These operations are carried out by following an algorithm: an ordered set of systematic operations that performs a calculation and finds a solution.
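To make input, processing, output and the idea of an algorithm concrete, here is a minimal Python sketch (an illustration, not taken from the article) that uses Euclid's algorithm for the greatest common divisor: two numbers are captured as input, processed by a fixed, ordered sequence of steps, and the result is produced as output. The function names and the "48 18" input format are hypothetical.

```python
def read_input(text: str) -> tuple[int, int]:
    """Input: capture two integers from a line such as '48 18'."""
    a_str, b_str = text.split()
    return int(a_str), int(b_str)


def gcd(a: int, b: int) -> int:
    """Processing: Euclid's algorithm, an ordered, finite sequence of steps.
    Repeatedly replace (a, b) with (b, a mod b) until b becomes 0."""
    a, b = abs(a), abs(b)
    while b != 0:
        a, b = b, a % b
    return a


def format_output(a: int, b: int, result: int) -> str:
    """Output: present the result so it can be displayed or transmitted."""
    return f"gcd({a}, {b}) = {result}"


a, b = read_input("48 18")
print(format_output(a, b, gcd(a, b)))  # gcd(48, 18) = 6
```

Each stage is a separate function so the three operations stay visible; the loop in `gcd` always terminates because the second value strictly decreases toward 0.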

Through these processes, computing produced various types of devices that made human tasks easier in all kinds of activities.

Although its area of application has no strict limits, it is mainly used in industrial processes, business management, information storage, process control, communications, transportation, medicine and education.

Generations

In computing, five generations of computers are usually distinguished, which marked modern history from the emergence of the first machines around 1940 to the present.

First generation

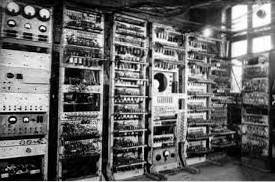

The first generation developed between 1940 and 1952, when computers were built with and operated by vacuum tubes (valves). Their development and use were fundamentally scientific and military.

Their circuits had to be physically modified in order to program them for each required purpose.

Second generation

The second generation developed between 1952 and 1964, with the appearance of transistors, which replaced the old vacuum tubes. This gave rise to commercial computers, which came with programming already in place.

Another central milestone of this stage was the appearance of the first high-level programming languages, such as COBOL and Fortran. Others followed in later years.

Third generation

The third generation was somewhat shorter than its predecessors: it lasted from 1964 to 1971 and was marked by the arrival of integrated circuits.

This stage was marked by lower production costs, greater storage capacity and smaller physical size.

In addition, as programming languages became more specialized and capable, the first utility programs began to flourish.

Fourth generation

The fourth generation began in 1971 and lasted about a decade, until 1981, with advances in electronic components driving its evolution.

This was when the first microprocessors appeared, combining the basic processing elements of earlier computers on a single integrated circuit.

Fifth generation

Finally, the fifth generation began in 1981 and extends to the present, a period in which technology pervades every aspect of modern society.

The main development of this phase was the personal computer (PC), which later led to a vast range of associated technologies that now shape the world.
