Computer generations: phases and characteristics

Computers are usually divided into six generations, from their first use to the present, although some authors count only five. The history of these machines began in the 1940s, and the latest generation is still being developed today.

Before the 1940s, when ENIAC, the first electronic digital computer, was developed, there had been some attempts to create similar machines. Thus the Z1, begun in 1936, is for many the first programmable computer in history.

In computer terminology, a change of generation occurs when computers differ significantly from those that were in use until then. At first, the term was used only to distinguish differences in hardware, but it now covers software as well.

The history of computers ranges from machines that occupied an entire room and had no operating system to current research into applying quantum technology. Since their invention, these machines have shrunk in size, incorporated processors and greatly increased their capabilities.

Article index

- 1 First generation

- 1.1 History

- 1.2 Characteristics

- 1.3 Main models

- 2 Second generation

- 2.1 History

- 2.2 Characteristics

- 2.3 Main models

- 3 Third generation

- 3.1 History

- 3.2 Characteristics

- 3.3 Main models

- 4 Fourth generation

- 4.1 History

- 4.2 Characteristics

- 4.3 Main models

- 5 Fifth generation

- 5.1 History

- 5.2 Characteristics

- 5.3 Main models

- 6 Sixth generation

- 6.1 History and characteristics

- 6.2 Quantum computing

- 6.3 Featured Models

- 7 References

First generation

The first generation of computers spanned 1940 to 1952, against the backdrop of the Second World War and the beginning of the Cold War. During this period the first automatic calculating machines appeared, based on vacuum tubes (valves).

Experts at the time had little faith that the use of computers would spread. According to their studies, just 20 machines would saturate the United States data-processing market.

History

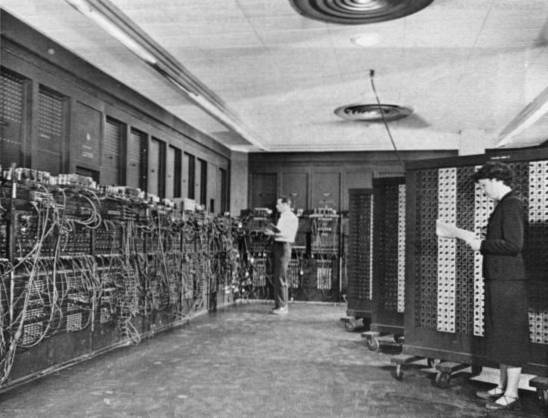

Although the German Z1 came first, ENIAC, short for Electronic Numerical Integrator and Computer, is generally considered the machine that marked the beginning of the first generation.

ENIAC was a fully digital computer, and all its processes and operations were executed in machine language. It was presented to the public on February 15, 1946, after three years of work.

By then World War II had ended, so computer research was no longer focused entirely on military needs. From that point on, the aim was for computers to meet the needs of private companies as well.

Subsequent research led to ENIAC's successor, EDVAC (Electronic Discrete Variable Automatic Computer).

The first computer to reach the general market was Saly, in 1951. The following year, UNIVAC was used to count the votes in the US presidential election: only 45 minutes were needed to obtain the results.

Characteristics

Early computers used vacuum tubes for their circuits and magnetic drums for memory. The machines were huge, to the point of occupying entire rooms.

This first generation needed a large amount of electricity to run. That not only made the machines more expensive to operate, it also generated a great deal of heat, which caused occasional failures.

These computers were programmed in machine language and could run only one program at a time. In those days, each new program took days or weeks to set up. Data were entered using punched cards and paper tape.

Main models

As noted, ENIAC (1946) was the first electronic digital computer. It was in fact an experimental machine that could not be programmed in the way the term is understood today.

Its creators were engineers and scientists from the University of Pennsylvania (USA), led by John Mauchly and J. Presper Eckert. The machine took up the entire basement of the university and weighed several tons. In full operation it could perform five thousand additions per second.

EDVAC (1949) was already a programmable computer. Although it was a laboratory prototype, its design included some ideas that are still present in today's computers.

The first commercial computer was the UNIVAC I (1951). It was developed by the company that Mauchly and Eckert had founded, the Eckert-Mauchly Computer Corporation; UNIVAC stands for Universal Automatic Computer.

Although IBM had already introduced some models, the IBM 701 (1953) was its first to become a success. The following year, the company introduced new models that added a magnetic drum, a mass storage mechanism.

Second generation

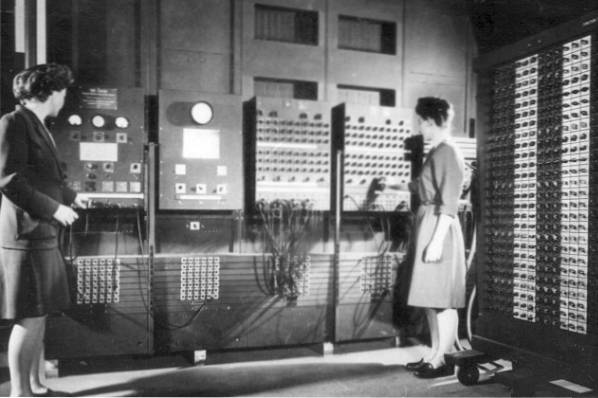

The second generation, which began in 1956 and lasted until 1964, was characterized by the replacement of vacuum tubes with transistors. As a result, computers became smaller and consumed less electricity.

History

The invention of the transistor was fundamental to the change of generation. With it, machines could be made smaller and needed less ventilation. Even so, production costs remained very high.

Transistors performed much better than vacuum tubes, which also meant that computers failed less often.

Another great advance of this period was the improvement of programming. This generation saw the appearance of COBOL, a programming language whose commercial release was one of the most important advances in program portability: a program could now be used on multiple computers.

IBM introduced the first magnetic disk system, called RAMAC. Its capacity was 5 megabytes of data.

One of the most important customers for these second-generation computers was the United States Navy, which used them, for example, to create the first flight simulator.

Characteristics

In addition to the breakthrough that transistors represented, the new computers also used arrays of magnetic cores for storage.

For the first time, computers could store instructions in their memory.

These computers made it possible to leave machine language behind and start using assembly, or symbolic, languages. The first versions of FORTRAN and COBOL also appeared in this period.
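The step from machine language to a symbolic language can be illustrated with a minimal Python sketch. The four-instruction machine, its opcodes and the `assemble` function are all invented for this example and do not correspond to any real second-generation computer; the point is only that the programmer writes mnemonics and a program translates them into numeric words.

```python
# Hypothetical instruction set: the opcodes below are invented for illustration.
OPCODES = {"LOAD": 0x1, "ADD": 0x2, "STORE": 0x3, "HALT": 0xF}


def assemble(source):
    """Translate symbolic lines such as 'ADD 11' into 8-bit words.

    Each word packs a 4-bit opcode (high bits) and a 4-bit address (low bits).
    """
    words = []
    for line in source.strip().splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        mnemonic = parts[0].upper()
        operand = int(parts[1]) if len(parts) > 1 else 0
        if not 0 <= operand <= 0xF:
            raise ValueError(f"address out of range: {operand}")
        words.append((OPCODES[mnemonic] << 4) | operand)
    return words


program = """
LOAD 10
ADD 11
STORE 12
HALT
"""

machine_code = assemble(program)
# Show the binary words that a machine-language programmer would have written by hand.
print([format(word, "08b") for word in machine_code])
```

Writing `ADD 11` is easier to read and check than the equivalent bit pattern, which is the practical advantage that symbolic languages brought.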

The 1951 invention of microprogramming by Maurice Wilkes meant that the development of CPUs was simplified.

Main models

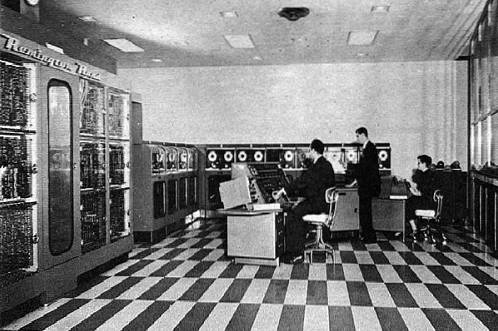

Among the models that appeared in this generation, the IBM 1401 mainframe stood out. Although expensive and bulky by today's standards, the company managed to sell 12,000 units of this computer.

In 1964, IBM introduced its 360 series, the first computers whose software could be configured for different combinations of capacity, speed and price.

The System/360, also designed by IBM, was another best seller in 1968. It was designed for individual use, and some 14,000 units were sold. Its predecessor, the System/350, had already included multiprogramming, new languages, and input and output devices.

Third generation

The invention of the chip, or integrated circuit, by the Americans Jack S. Kilby and Robert Noyce revolutionized the development of computers. Thus began the third generation of these machines, which ran from 1964 to 1971.

History

The appearance of integrated circuits was a revolution in the field of computers. Processing capacity increased and manufacturing costs fell.

These circuits, or chips, were printed on silicon wafers to which small transistors were added. Their introduction was the first step towards the miniaturization of computers.

In addition, these chips allowed computers to be used more broadly. Until then, machines had been designed either for mathematical applications or for business, but not for both. Chips made programs more flexible and allowed models to be standardized.

It was IBM that launched the computer that started this third generation. On April 7, 1964, the company presented the IBM 360, built with SLT (Solid Logic Technology).

Characteristics

From this generation on, the electronic components of computers were integrated into a single piece, the chip. Capacitors, diodes and transistors were placed inside it, which made it possible to increase loading speed and reduce energy consumption.

In addition, the new computers gained in reliability and flexibility and supported multiprogramming. Peripherals were modernized, and minicomputers appeared at a much more affordable cost.

Main models

The launch of the IBM 360 was the event that ushered in the third generation. Its impact was so great that more than 30,000 units were manufactured.

Another prominent model of this generation was the CDC 6600, built by Control Data Corporation. At the time, this computer was considered the most powerful ever built, since it could execute 3,000,000 instructions per second.

Finally, among the minicomputers, the PDP-8 and PDP-11 stood out, both endowed with a large processing capacity.

Fourth generation

The next generation of computers, between 1971 and 1981, featured personal computers. Little by little, these machines began to reach homes.

History

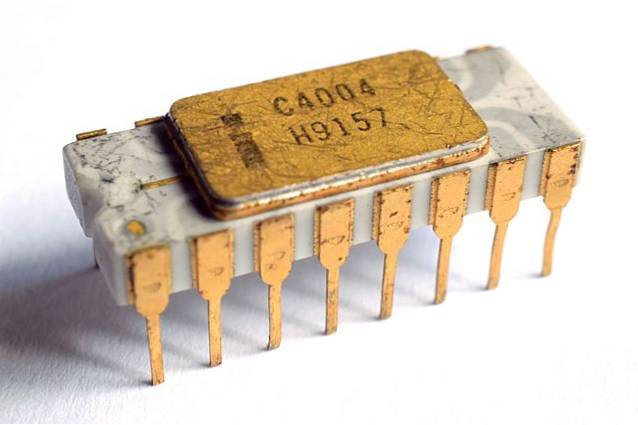

Integrating thousands of circuits on a single silicon chip made possible the microprocessor, the main protagonist of the fourth generation of computers. The machines that had filled a room in the 1940s shrank until they needed only a small table.

On a single chip, as in the case of the Intel 4004 (1971), all the fundamental components could fit, from memory and the central processing unit to the input and output controls.

The main result of this great technological advance was the appearance of personal computers, or PCs.

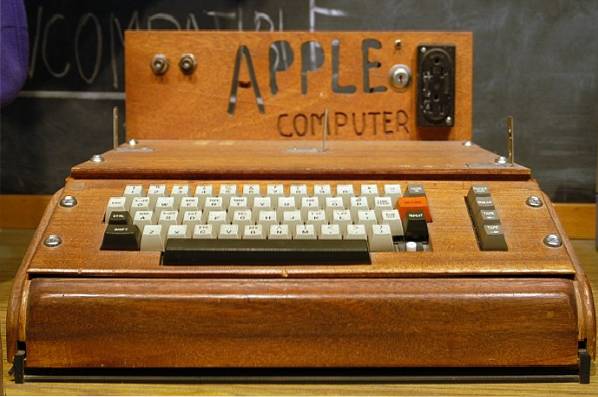

During this stage, one of the most important companies in the field of computing was born: Apple. It was founded after Steve Wozniak and Steve Jobs invented the first mass-market microcomputer in 1976.

IBM introduced its first computer for home use in 1981, and Apple released the Macintosh three years later. Processing power and other technological advances were key to these machines beginning to connect with each other, which would eventually give rise to the internet.

Other important elements that appeared in this phase were the graphical user interface (GUI), the mouse and handheld devices.

Characteristics

In this fourth generation, magnetic-core memories were replaced by silicon-chip memories. In addition, the miniaturization of components allowed many more of them to be integrated into those chips.

In addition to PCs, in this phase so-called supercomputers were also developed, capable of performing many more operations per second.

Another characteristic of this generation was the standardization of computers, especially PCs. In addition, so-called clones began to be manufactured, which cost less without losing functionality.

As noted, miniaturization was the most important feature of the fourth generation of computers. In large part, this was achieved through the use of VLSI (very-large-scale integration) microprocessors.

The prices of computers began to fall, allowing them to reach more households. Elements such as the mouse and the graphical user interface made the machines easier to use.

Processing power also saw a large increase, while power consumption was further reduced.

Main models

This generation of computers was distinguished by the appearance of numerous models, both PCs and clones.

The CRAY-1 supercomputer also appeared in this period. The first unit was installed at the Los Alamos National Laboratory, and another 80 were later sold.

Among the minicomputers, the PDP-11 stood out for its longevity in the market. This model had appeared during the previous generation, before microprocessors, but its popularity led to it being adapted to use those components.

The Altair 8800 went on sale in 1975 and was noted for offering the BASIC language. This computer used the Intel 8080, an 8-bit microprocessor. Its bus, the S-100, became the standard for the next several years.

Part of this model's success was due to the fact that it was marketed together with a keyboard and mouse.

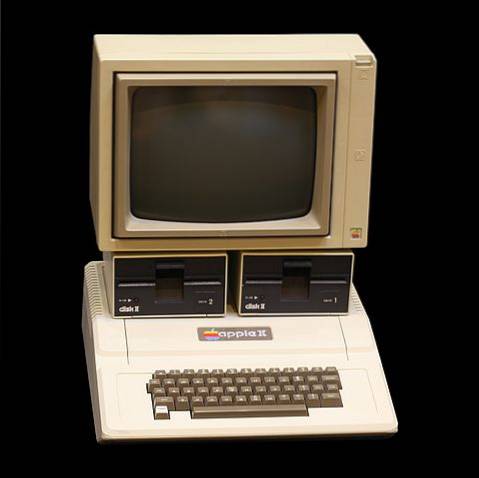

In 1977 the Apple II appeared, and it sold with great success for seven years. The original model had a 6502 processor, 4 KiB of RAM and an 8-bit architecture. Later, in 1979, the company introduced the Apple II Plus, with more RAM.

Fifth generation

For some authors, the fifth generation of computers began in 1983 and continues to the present day. Others, on the other hand, keep the start date but claim that it ended in 1999.

History

The fifth generation of computers got its start in Japan. In 1981, the country announced its plans to develop intelligent computers that could communicate with human beings and recognize images.

The plan included updating the hardware and adding operating systems with artificial intelligence.

The Japanese project lasted eleven years but did not achieve the results its backers wanted. In the end, computers evolved only within existing parameters, without incorporating artificial intelligence.

Despite that, other companies keep trying to incorporate artificial intelligence into computers. Ongoing projects include those of Amazon, Google, Apple and Tesla.

The first steps have been taken with smart home devices, which seek to integrate all household activities, and with autonomous cars.

Another step being pursued is to give machines the ability to learn by themselves from acquired experience.

Apart from these projects, during the fifth generation the use of laptops, or notebook computers, became widespread. With them, the computer was no longer fixed in one room but could accompany the user and be used at any time.

Characteristics

The Japanese project to build more advanced computers and the manufacture of the first supercomputer that worked with parallel processes marked the beginning of the fifth generation.

From then on, computers were able to perform new tasks, such as automatic language translation. Likewise, information storage began to be measured in gigabytes, and DVDs appeared.

In terms of structure, fifth-generation computers integrated into their microprocessors some of the features that had previously belonged to the CPU.

The result has been the emergence of highly complex computers. Furthermore, users do not need any programming knowledge to use them: to solve highly complex problems, it is enough to access a few functions.

Despite that complexity, artificial intelligence is not yet built into most computers. There have been some advances in communication through human language, but the self-learning and self-organization of machines are still under development.

On the other hand, the use of superconductors and parallel processing allows all operations to be carried out at much higher speed. The number of simultaneous tasks a machine can handle has also grown considerably.

Main models

The defeat of world chess champion Garry Kasparov by a computer in 1997 seemed to confirm the advance of these machines towards human-like intelligence. The machine's 32 processors, working in parallel, could analyze 200 million chess positions per second.

Deep Blue, as IBM's computer was called, had also been programmed to perform calculations on new drugs, search large databases and carry out the complex, massive calculations required in many fields of science.

Another computer that took on humans was IBM's Watson. In this case, the machine defeated two champions of Jeopardy!, the US television quiz show.

Watson was equipped with multiple high-power processors that operated in parallel. This allowed it to search a huge self-contained database without being connected to the internet.

In order to deliver that result, Watson needed to process natural language, perform machine learning, reason about knowledge, and perform deep analysis. According to experts, this computer proved that it was possible to develop a new generation that would interact with humans.

Sixth generation

As noted above, not all experts agree on the existence of a sixth generation of computers. For those who do not, the fifth generation is still under way today.

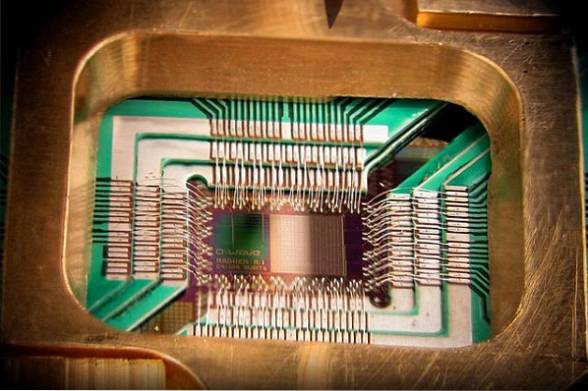

Others, on the other hand, point out that the advances now being made are important enough to form part of a new generation. Among these lines of research, the one that stands out concerns what is considered the future of computing: quantum computing.

History and characteristics

Technology research has been unstoppable in recent years. In the realm of computers, the current trend is to try to incorporate neural learning circuits, a kind of artificial "brain". This would mean manufacturing the first smart computers.

One of the keys to achieving this is the use of superconductors. This would allow a large reduction in electricity consumption and, therefore, less heat generation. The systems would thus be almost 30 times more powerful and efficient than current ones.

New computers are being built with vector architectures, as well as with specialized processor chips for particular tasks. To this must be added the implementation of artificial intelligence systems.

However, experts believe that much more research still needs to be done to achieve the goals. The future, according to many of those experts, will be the development of quantum computing. This technology would definitively mark the entrance to a new generation of computers.

Quantum computing

The most important technology companies, such as Google, Intel, IBM and Microsoft, have been trying to develop quantum computing systems for some years.

This type of computing has different characteristics from classical computing. To begin with, it is based on qubits rather than bits. A qubit can represent a combination of zero and one at the same time, whereas a bit also uses those two values but can hold only one of them at any given moment.
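The contrast between a bit and a qubit can be illustrated with a minimal Python sketch. This is a simplified classical simulation of a single qubit, not the way a real quantum computer is programmed, and the function names `probabilities` and `measure` are hypothetical, chosen only for this example. The qubit is modelled as two amplitudes whose squared magnitudes give the chance of reading 0 or 1.

```python
import math
import random


def probabilities(alpha, beta):
    """Return the chances of reading 0 and 1 for amplitudes alpha and beta."""
    return abs(alpha) ** 2, abs(beta) ** 2


def measure(alpha, beta, rng=random):
    """Simulate one measurement: the combined state collapses to a plain 0 or 1."""
    p0, p1 = probabilities(alpha, beta)
    if not math.isclose(p0 + p1, 1.0):
        raise ValueError("amplitudes must be normalized")
    return 0 if rng.random() < p0 else 1


# A classical bit is always exactly 0 or exactly 1.
classical_bit = 1

# Assumed example state: an equal combination of 0 and 1.
amp = 1 / math.sqrt(2)
p0, p1 = probabilities(amp, amp)

# Measuring many identically prepared qubits gives roughly half zeros, half ones.
rng = random.Random(0)  # fixed seed so repeated runs behave the same way
counts = {0: 0, 1: 0}
for _ in range(1000):
    counts[measure(amp, amp, rng)] += 1

print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")
print("measurement counts:", counts)
```

Before measurement the qubit carries both possibilities at once; each individual reading still yields an ordinary 0 or 1.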

The power offered by this new technology will make it possible to respond to hitherto unsolvable problems.

Featured Models

In 2013 the company D-Wave Systems launched its D-Wave Two quantum computer, considerably faster than conventional machines and with a computing power of 439 qubits.

Despite this advance, it was not until 2019 that the first commercial quantum computer appeared. It was the IBM Q System One, which combines quantum and traditional computing. This has allowed it to offer a 20-qubit system, intended for research and large calculations.

On September 18 of the same year, IBM announced that it planned to launch a new quantum computer soon, with 53 qubits. When marketed, this model would become the most powerful in the commercial range.

References

- Next U. The history of the computer generation. Retrieved from nextu.com

- Gomar, Juan. The generations of computers. Retrieved from profesionalreview.com

- Trigo Aranda, Vicente. The generations of computers. Retrieved from acta.es

- Business to Business. The five generations of computers. Retrieved from btob.co.nz

- Beal, Vangie. The Five Generations of Computers. Retrieved from webopedia.com

- McCarthy, Michael J. Generations, Computers. Retrieved from encyclopedia.com

- Nembhard, N. The Five Generations of Computers. Retrieved from itcoursenotes.webs.com

- Amuno, Alfred. Computer History: Classification of Generations of Computers. Retrieved from turbofuture.com
